Big O notation

Learn the full details of the Big O notation: the first of the top 3 notations used in algorithm analysis to represent performance.

11/09/2023

In the last article we got to know the asymptotic classes and we saw how important they are for the analysis of algorithms. In this article we will see the first of three notations used in computing to represent the asymptotic behavior of algorithms: the O notation (pronounced “Big O”).

If you haven’t read the article about asymptotic classes, click on this link and access the article where we teach you valuable knowledge on the subject.

In the most straightforward way possible, the O notation provides us with a simplified symbology to represent an upper performance bound for an algorithm. A maximum time limit that an algorithm takes to run:

- Instead of saying that the time complexity of the Bubble Sort algorithm (in the worst case) is equal to T(n)= 5n2-n+1, we say that its complexity is O(n2) (read “Big O of n squared”). We use the quadratic class n2 inside the notation parentheses to specify that the algorithm takes, at most, a quadratic time to run.

- Instead of saying that the time complexity of the linear algorithm is equal to T(n)= 4n+1, we say that its complexity is O(n) (read “Big O of n”). We use the linear class n inside the notation parentheses to specify that the algorithm takes, at most, linear time to run.

This is a bit of a rough explanation, but it does get you started to understand the meaning and usage of O notation.

Example for linear function

When we say that the linear function 4n+1 is O(n), we are saying that: “the asymptotic behavior of a linear function is equal to or greater than its“. It means to say: the function 4n+1 will never grow to the point of surpassing the linear behavior.

In this way, O(n) presents itself as an upper limit, and we know that the 4n+1 function will never show a growth behavior that exceeds this upper limit.

Example: for the function 4n+1, there is another function with linear behavior that limits it from above. Notice in the graph below that, for values of n>1, the function 4n+1 is overcome by another linear function (in blue).

Likewise, it is also correct to state that the linear function 4n+1 is O(n2), as the function 4n+1 will never grow to the point of surpassing the quadratic behavior. Therefore, O(n2) also presents itself as an upper asymptotic limit:

On the other hand, it would be wrong to say that the linear function 4n+1 is O(1), because the function 4n+1 will always grow to the point where it exceeds the constant behavior. In this way, O(1) does not present itself as an asymptotic upper bound:

In short:

4n+1 is O(n2), as it can be overcome (bounded from above) by a quadratic function.

4n+1 is O(n), because it can be overcome (bounded from above) by a linear function.

4n+1 is not O(1), as it cannot be overcome (bounded from above) by a constant function.

Example for quadratic function

When we say that the quadratic function 5n2-n+1 is O(n2), we are saying that: “the asymptotic behavior of a quadratic function is equal to or greater than its“. Meaning: the function 5n2-n+1 will never grow to the point of surpassing the quadratic behavior.

In this way, O(n2) is presented as an upper limit, and we know that the function 5n2-n+1 will never show a growth behavior that exceeds this upper limit.

Notice in the graph below that: for the function 5n2-n+1, there is another function (in blue) with quadratic behavior that limits it above:

Likewise, it is also correct to state that the quadratic function 5n2-n+1 is O(n3), as the function 5n2-n+1 will never grow to the point of surpassing the cubic behavior. Therefore, O(n3) also presents itself as an asymptotic upper limit:

On the other hand, it would be wrong to say that the function 5n2-n+1 is O(n), as the function 5n2-n+1 will always grow to the point of overcoming the linear behavior. In this way, O(n) does not present itself as an upper asymptotic limit:

In short:

5n2-n+1 is O(n3), because it can be overcome (bounded from above) by a cubic function.

5n2-n+1 is O(n2), because it can be overcome (bounded from above) by a quadratic function.

5n2-n+1 is not O(n), as it cannot be overcome (bounded from above) by a linear function.

Formal mathematical definition of Big O notation

Given two algorithm time complexity functions: f(n) and g(n). The formal mathematical definition states that:

- f(n) = O(g(n)) (read “f of n is Big O of g of n”) if there are positive constants c and n0, such that f(n) ≤ c.g(n) for all n ≥ n0.

This definition seems a little complicated to understand at first sight, but we will demonstrate it with some practical examples.

Mathematical resolution: 1st example

Let’s try to demonstrate by definition that 4n+1=O(n) (read “is Big O of n”).

First, we have to:

- f(n) = 4n+1

- g(n) = n

If, by mathematical definition, we need to demonstrate that there is a positive constant c and a positive n0 initial value, so that:

- f(n) ≤ c.g(n) for all n ≥ n0.

So, for this problem, we need to show that:

- 4n+1 ≤ c.n for all n ≥ n0.

In summary: we need to prove that, for all values of n greater than or equal to n0, the function 4n+1 is less than or equal to c multiplied by n.

To do so, the first decision we need to make is to choose a value for the constant c. In this case, let’s consider c equal to the value 5. See below a table that shows the inequality results for c=5 and for some values of n.

Note that, for n equal to 0 (zero), the function of the algorithm 4n+1 is greater than c.n. But when n is equal to 1, the values of c.n start to overcome the function of the 4n+1 algorithm. When we plot a graph for both functions, we visualize with greater clarity this exact moment of overcoming the c.n function:

This means that O(n) represents an upper asymptotic limit for the function 4n+1. Because, from a certain value n, c.n overcomes the 4n+1 function.

However, what really matters for the analysis of algorithms is that we were able to demonstrate our objective, that is, that there is a constant c (equal to 5) and an n0 (equal to 1), such that 4n+1 ≤ c.n for all the values of n greater than or equal to n0. In this way, it is proved that, in fact, 4n+1=O(n) (it is Big O of n).

Mathematical resolution: 2nd example

Let’s try to demonstrate by definition that 5n2-n+1=O(n2) (read “is Big O of n squared”).

First, we have to:

- f(n) = 5n2-n+1

- g(n) = n2

If, by mathematical definition, we need to demonstrate that there is a positive constant c and a positive n0 initial value, so that:

- f(n) ≤ c.g(n) for all n ≥ n0

So, for this problem, we need to show that:

- 5n2-n+1 ≤ c.n2 for all n ≥ n0

In short: we need to prove that, for all values of n greater than or equal to n0, the function 5n2-n+1 is less than or equal to c multiplied by n2.

Again, we need to choose a value for the c constant. In this case, we will also consider c equal to the value 5. Below is a table that shows the inequality results for c=5 and for some values of n:

Note that, for n equal to 0(zero), the function of the 5n2-n+1 algorithm is greater than c.n2. But when n is equal to 1, the values of c.n2 start to outperform the function of the 5n2-n+1 algorithm. When we plot a graph for both functions, we visualize with greater clarity this exact moment of overcoming the c.n2 function:

This means that O(n2) represents an upper asymptotic limit for the function 5n2-n+1. Because, from a certain value n, c.n2 overcomes the function 5n2-n+1.

However, what really matters for the analysis of algorithms is that we were able to demonstrate our objective, that is, that there is a constant c (equal to 5) and an n0 (equal to 1), such that 5n2-n+1 ≤ c.n2 for all values of n greater than or equal to n0. In this way, it is proved that, in fact, 5n2-n+1=O(n2) (it is Big O of n squared).

Mathematical resolution: 3rd example (wrong)

Let’s try to demonstrate by definition that 2n2-n=O(n).

First, we have to:

- f(n) = 2n2-n

- g(n) = n

If, by mathematical definition, we need to demonstrate that there is a positive constant c and a positive n0 initial value, so that:

- f(n) ≤ c.g(n) for all n ≥ n0

So, for this problem, we need to show that:

- 2n2-n ≤ c.n for all n ≥ n0

In short: we need to prove that, for all values of n greater than or equal to n0, the function 2n2-n is less than or equal to c multiplied by n.

At first glance, 2n2-n has quadratic behavior and c.n has linear behavior. In this way, it is clear that this proof is false, since a linear function (c.n) cannot represent an upper asymptotic limit for a quadratic function (2n2-n).

However, we will carry out a more in-depth analysis to understand the reason for this impossibility.

Let’s carry out some algebraic transformations in order to isolate the constant c in the inequality and understand its possible values:

The resulting inequality already shows us the impossibility of any constant c that makes it valid for large values of n (n→∞), because, regardless of how large the value of c is, the value of n will always exceed it.

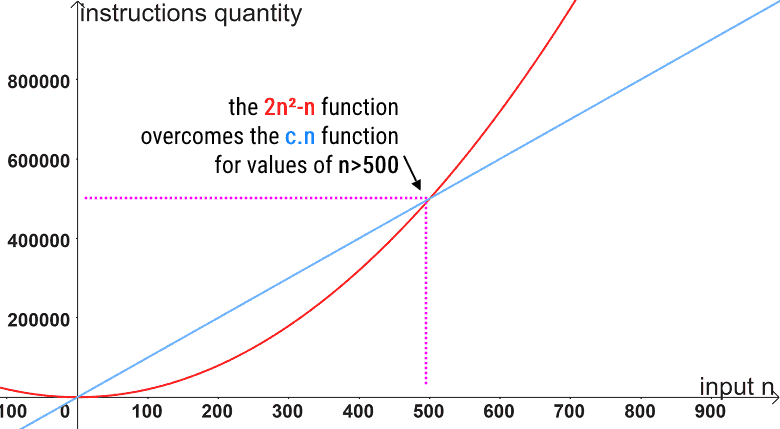

Below are some graphs that illustrate some scenarios of c.n being overcome by the quadratic function 2n2-n for different values of constant c.

In short: it doesn’t matter how big the constant c is; the 2n2-n function will always outperform the c.n function.

Therefore, there does not exist a constant c and a n0, such that 2n2-n ≤ c.n for all values of n greater than or equal to n0.

In this way, it is proved that, in fact, 2n2-n≠O(n) (read as “it is not Big O of n”).

Exercises

To demonstrate that you have a good understanding of the concept and the formal mathematical definition of Big O notation, solve the proposed exercises:

Exercises A

Try to prove mathematically that n2+800=O(n2).

Try to prove mathematically that 2n+10=O(n).

Try to prove mathematically that n2=O(n).

Try to prove mathematically that 7n-2=O(n).

Try to prove mathematically that n2+20n+5=O(n3).

Exercise B

Using the instruction counting technique, find the complexity formula T(n) of the algorithm below and then try to mathematically demonstrate that this formula found is O(n).

1.public static void func(int[ ] n){

2. for (int i=0; i<n.length-1; i++) {

3. for (int j=0; j<n.length-1; j++) {

4. n[j]=n[j]+i+j;

5. }

6. }

7.}

Continue your studies in this course and read the next article about the second asymptotic notation used to represent algorithm performance: the “Big Omega notation”.

David Santiago

Master in Systems and Computing. Graduated in Information Systems. Professor of Programming Language, Algorithms, Data Structures and Development of Digital Games.